Insane Metal

Member

I need independent benches.

It's so hard to believe that AMD is competing on the high end. Lol.

I could see some of the values they presented as bogus or 'best case', but they presented so many bench numbers that I can't see AMD being so disingenuous that they'd be untruthful about the whole affair. You know there are going to be a TON of people reviewing these new cards, so it behooves AMD to be as accurate as possible, if only to say "we told you so", since it's been so long since Radeon has been competitive at the high end.

Games heavily favored by AMD. Also, people should stop testing the 5000 + 6000 combination. Zero people have that as of now and barely anybody will be upgrading to it. It's completely useless for 99% of the people out there and also heavily limited to certain games.

Show me some real games people play, like Metro Exodus / Cyberpunk / CoD / AC games / Red Dead Redemption etc.

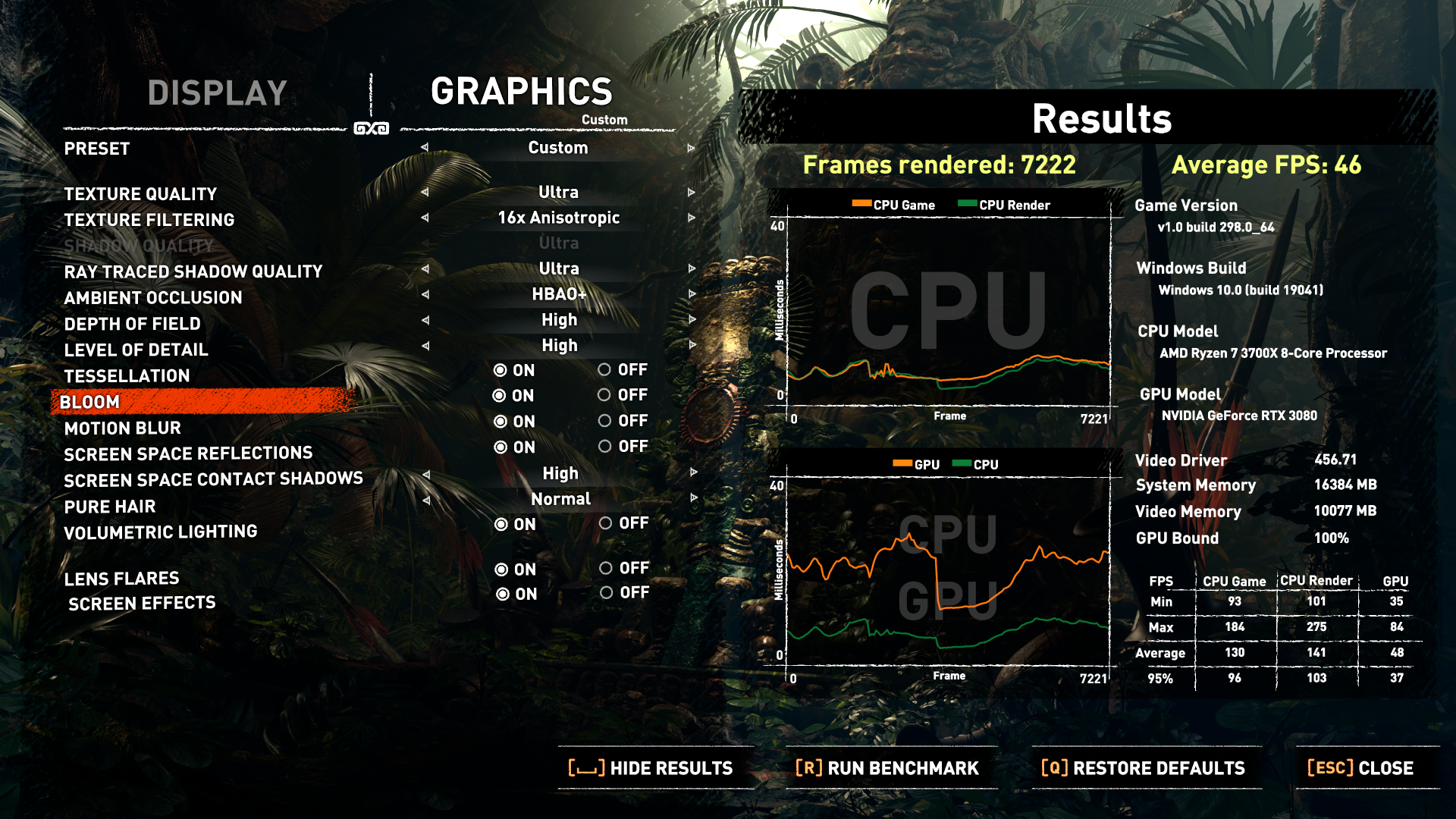

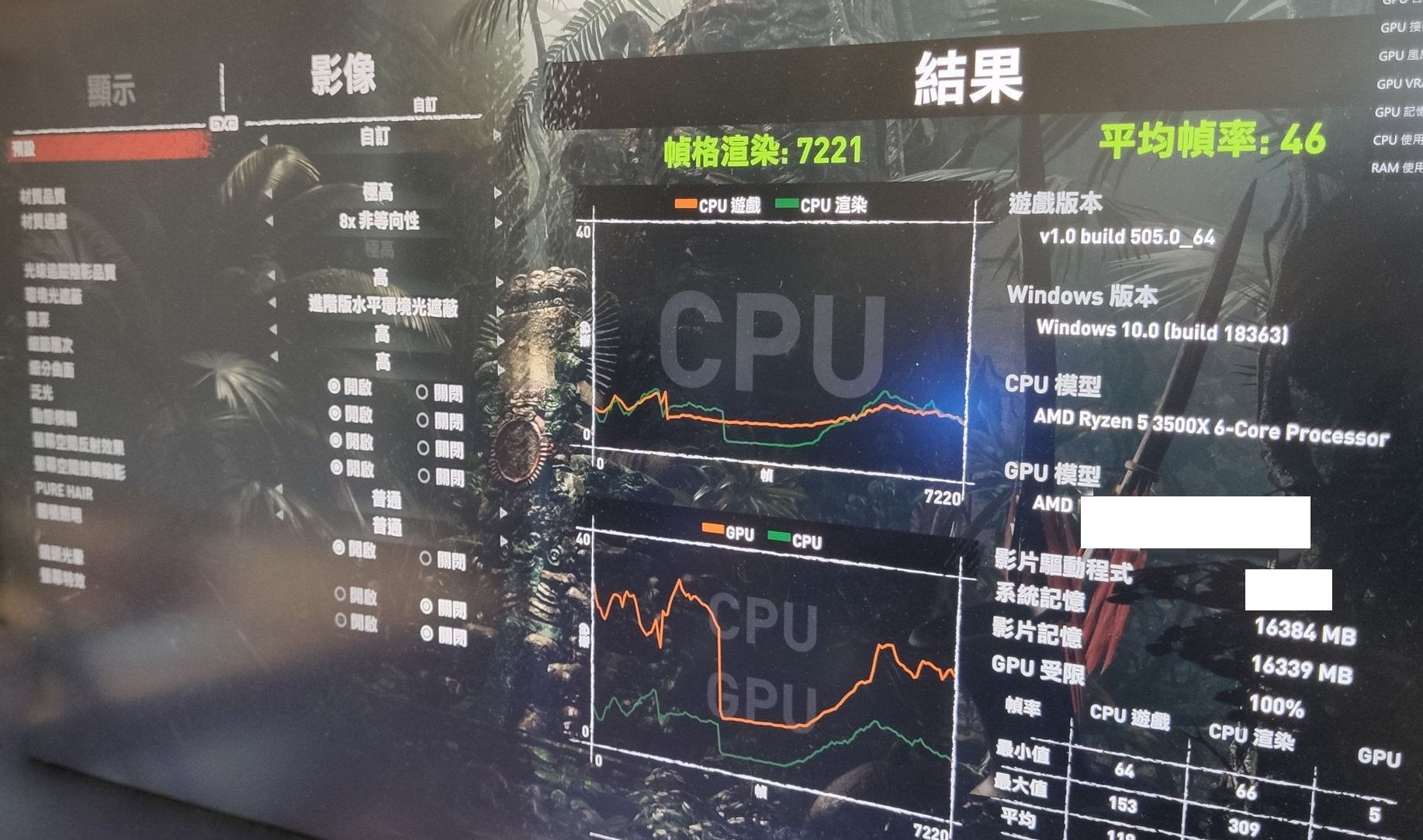

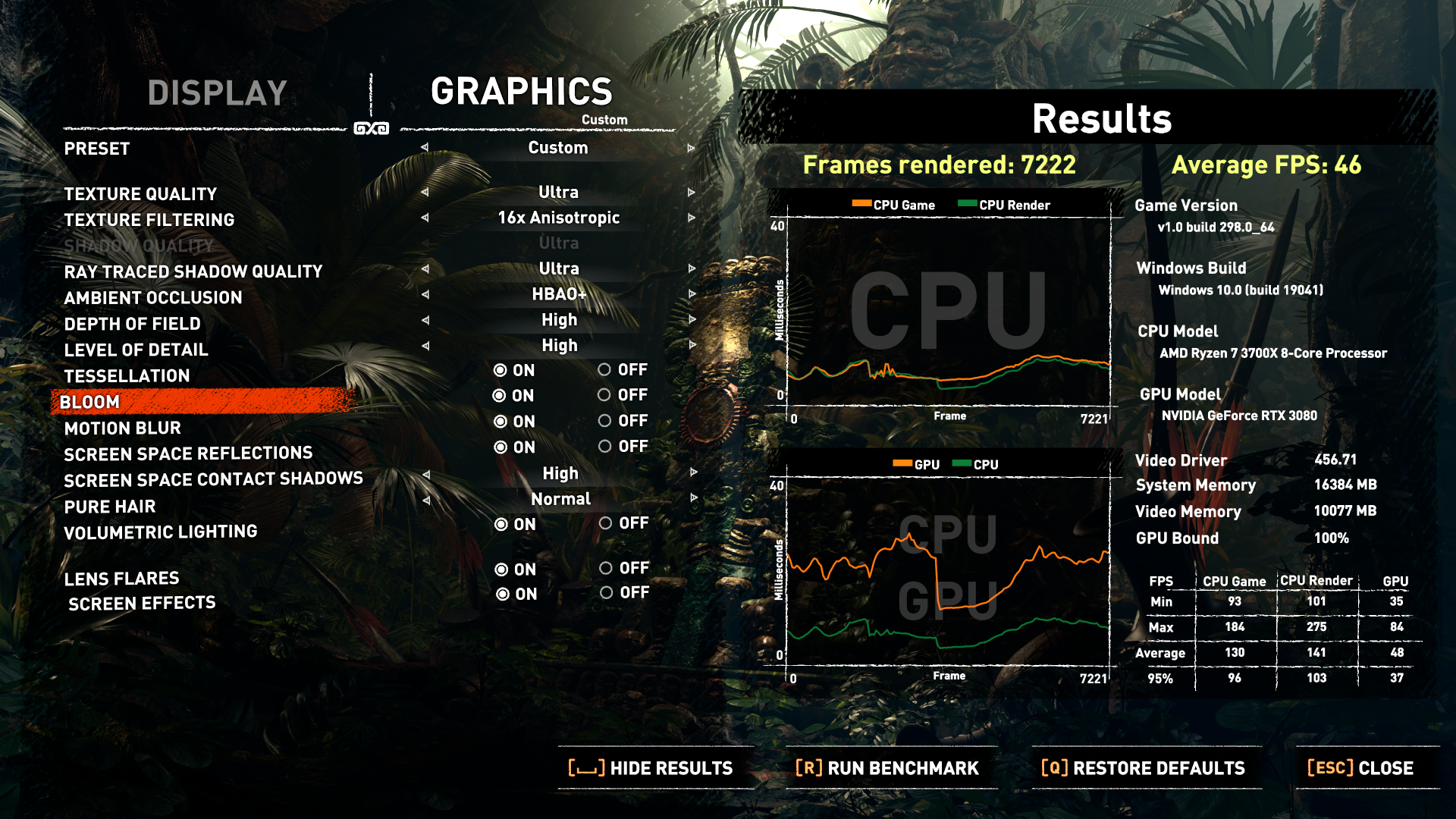

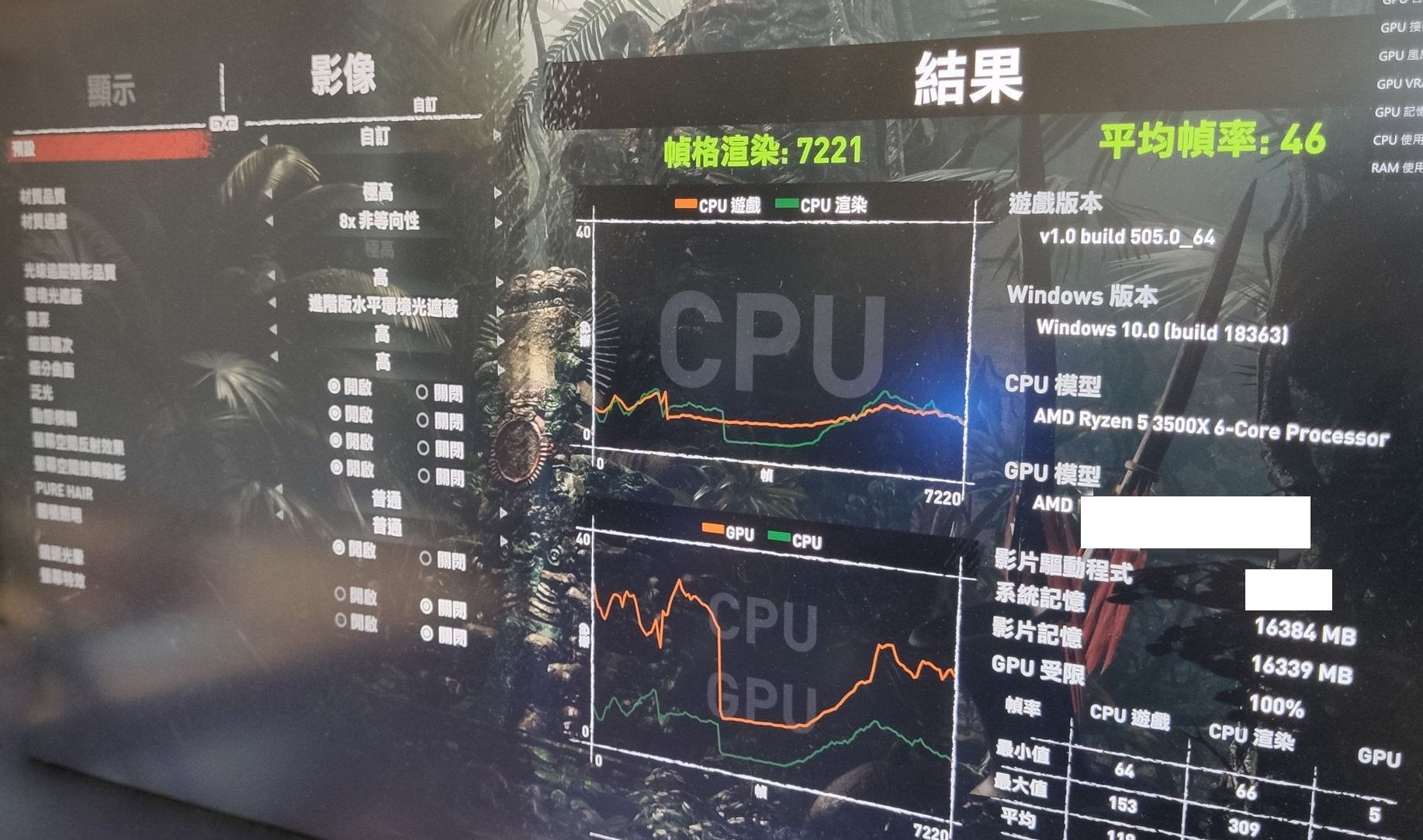

AMD has released their benchmark results as part of a benchmark tool:

Note that SAM (Smart Access Memory) is enabled for these.

I sold my whole gaming PC a couple of months ago and am currently debating whether to build a new one or go console. The idea of an all-AMD build to synergize a 5000 CPU and a 6000 GPU is incredibly relevant to the decision I'll make in the coming weeks.

I believe AMD made a compromise with the memory subsystem.

Let's say Infinity Cache (128 MB) takes 128 mm² of die and the 256-bit GDDR6 controllers take another 64 mm², for a total of 192 mm² out of 505 mm².

If AMD had used the same memory subsystem as Vega II (only 40 mm²), 16 GB at 1 TB/s would have been by far the better choice.

But we know about cost/availability..

Dunno what that means but it sounds objective. I like it.
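For anyone who wants to check the die-area guesses in the memory subsystem post above, here is a rough sketch of the arithmetic. Every number is the poster's estimate rather than a measured figure, and the constant and function names are made up for illustration.

```python
# Die-area estimates from the post above (the poster's guesses, not measured figures).
INFINITY_CACHE_MM2 = 128   # guessed area of the 128 MB Infinity Cache
GDDR6_PHY_MM2 = 64         # guessed area of the 256-bit GDDR6 controllers
TOTAL_DIE_MM2 = 505        # die size assumed in the post
HBM_SUBSYSTEM_MM2 = 40     # guessed area of a Vega II style memory subsystem


def share_of_die(area_mm2: float, die_mm2: float = TOTAL_DIE_MM2) -> float:
    """Return the fraction of the die taken by a block of the given area."""
    return area_mm2 / die_mm2


memory_area = INFINITY_CACHE_MM2 + GDDR6_PHY_MM2
print(f"cache + GDDR6: {memory_area} mm^2, {share_of_die(memory_area):.1%} of the die")
print(f"HBM-style:     {HBM_SUBSYSTEM_MM2} mm^2, {share_of_die(HBM_SUBSYSTEM_MM2):.1%} of the die")
print(f"difference:    {memory_area - HBM_SUBSYSTEM_MM2} mm^2")
```

Under those assumptions the cache plus GDDR6 setup would take a bit over a third of the die, which is the trade-off being argued about here.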

This is the dumbest post I ever read. Barely anyone will upgrade to Zen 3? OK, talk about being delusional.

Warring aside he left some retarded comments earlier in AMD (and Ryzen?) threads. He's usually cool but right now... unhinged.

I'm literally getting a new 5900 CPU and depending on upcoming benchmarks in full HD and VR games I'll either go with AMD or Nvidia. If AMD is better, I'll sell my 3080 and use the profit to offset maybe the 6900XT to 3080FE prices.

That's probably not feasible, I'd save the cost & time to return shit. Buy AMD GFX next time round dut. Stick with AMD processors though or I'll shit on your porch decorations.

RX 6800 seems overpriced.

vs 3070 that people were orgasmic about:

16% higher price for 18% higher perf, +8GB VRAM, and better perf/watt

Yea, though the 6800XT seems the best deal to me.
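The 16% / 18% comparison above can be turned into a quick perf-per-dollar check. This is only a sketch using the two percentages quoted in the post. The function name is invented for illustration, and real street prices and benchmark results may differ.

```python
def perf_per_dollar_ratio(price_increase: float, perf_increase: float) -> float:
    """Relative perf-per-dollar of the pricier card versus the cheaper one.

    Both arguments are fractions, e.g. 0.16 for "16% higher".
    A result above 1.0 means the pricier card gives more performance per dollar.
    """
    return (1.0 + perf_increase) / (1.0 + price_increase)


# Figures quoted in the post: 16% higher price for 18% higher performance.
ratio = perf_per_dollar_ratio(price_increase=0.16, perf_increase=0.18)
print(f"perf per dollar vs the 3070: {ratio:.3f}x")
```

On those numbers the two cards land within a couple of percent of each other per dollar, so the extra VRAM and perf/watt are what actually separate them.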

But if it was $499

Yep and the 3070 has the GDDR6 VRAM as well.

Seems silly to go with a 3080 over 6800xt at this point.

Need to see some ray tracing enabled benchmarks.

Who is ray and why are we tracing him

Ray Manuél and he ran off with my light bulbs

he dun it again

The RT performance is going to be the do/die deal here. If AMD cards are significantly slower (~40%) then it's a no brainer to go with Nvidia. I can't get a card that's great at rasterization + 16G VRAM but has very slow RT performance for the next 3yrs. That's a waste of money in my book.

RTX performance won't really matter if their super resolution DLSS equivalent is solid.

I mean, the 5700 XT is already more or less on par with the 2070 Super, which itself is about 30% slower than the 2080 Ti, and the 6900 XT is about double a 5700 XT.
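Chaining the rough ratios from the 5700 XT comparison above gives a ballpark figure for the 6900 XT against the 2080 Ti. This is a back-of-the-envelope sketch, not a benchmark. All three ratios are the poster's estimates and the variable names are made up for illustration.

```python
# Rough ratios taken from the post above; all are the poster's estimates.
RTX_2080_TI = 1.0                      # baseline
RTX_2070_SUPER = RTX_2080_TI * 0.70    # "about 30% slower than the 2080 Ti"
RX_5700_XT = RTX_2070_SUPER * 1.0      # "more or less on par with the 2070 Super"
RX_6900_XT = RX_5700_XT * 2.0          # "about double a 5700 XT"

print(f"6900 XT vs 2080 Ti: {RX_6900_XT / RTX_2080_TI:.2f}x")
```

If those estimates hold, the 6900 XT would sit around 40% ahead of a 2080 Ti, which is the point being made about AMD showing up at the high end.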

You love RT for some reason. I don't know why, apart from it being something good in the future. And with 3080/3090 performance we know it to be the far future.

I don't think it's very surprising for people into their graphics tech to be into RT. It's the future and it's awesome, but it won't sway me completely to the green team considering the general price/performance, and it's SO damn early in the RT game.

Anandtech said: ...the amount of die space they have to be devoting to the Infinity Cache is significant. So this is a major architectural trade-off for the company.

But AMD isn't just spending transistors on cache for the sake of it; there are several major advantages to having a large, on-chip cache, even in a GPU. As far as perf-per-watt goes, the cache further improves RDNA2’s energy efficiency by reducing the amount of traffic that has to go to energy-expensive VRAM. It also allows AMD to get away with a smaller memory subsystem with fewer DRAM chips and fewer memory controllers, reducing the power consumed there. Along these lines, AMD justifies the use of the cache in part by comparing the power costs of the cache versus a 384-bit memory bus configuration. Here a 256-bit bus with an Infinity Cache only consumes 90% of the power of a 384-bit solution, all the while delivering more than twice the peak bandwidth.

Furthermore, according to AMD the cache improves the amount of real-world work achieved per clock cycle on the GPU, presumably by allowing the GPU to more quickly fetch data rather than having to wait around for it to come in from VRAM. And finally, the Infinity Cache is also a big factor in AMD’s ray tracing accelerator cores, which keep parts of their significant BVH scene data in the cache.

Do you think you will be able to easily acquire a 6900XT and sell a used 3080 for significantly more than it cost in 6 weeks?

Because I've worked with RT for several years and it gives the best rendering results. It's just that simple.

I think it's just too slow right now, and more of a "teaser" feature. Kinda like the early days of DX11 GPUs.

You will remember that I asked you before why this is being pushed so hard and I understood that it's the way forward. I agree but performance isn't great, it should be better for me to accept it.

As is? I prefer the frames.

You're right it will take a significant portion of die space but I don't think compromise is the right word.

Infinity Cache + 256-bit vs 384-bit: sure, better TDP/latency, 4 GB more, and easier reduction in future nodes, for only ~100 mm².

Yeah, why are they tracing me?

Looks like the reference model... Still waiting for their Pulse and Nitro models.

In summary:

1) no dedicated hardware ray tracing

2) no DLSS support

3) basically the same price and efficiency for roughly the same performance.

And people are celebrating? C'mon guys, NVIDIA is still the clear leader and a much more future-proof solution going forward.

Any news on clock speed for these cards?

done a quick SOTR raytraced shadows bench maxed at 4k on my rtx3080:

vs.

rx 6800 (presumably)

not sure if the settings are the same. mine should be maxed. done that in a bit of a hurry.

So the 6800 is about the same as the 3080? And not just the 3070?

According to the Twitter account the raytracing quality was High, not Ultra.